Data-driven success: tailored consulting for your needs

We have executed over 300 successful data science consulting projects, and our track record is unrivaled. We offer integrated data science solutions covering all of our clients' requirements, from foundational strategies to sophisticated enterprise-level applications. Let's partner to tailor your data science project to your needs!

CASE STUDY

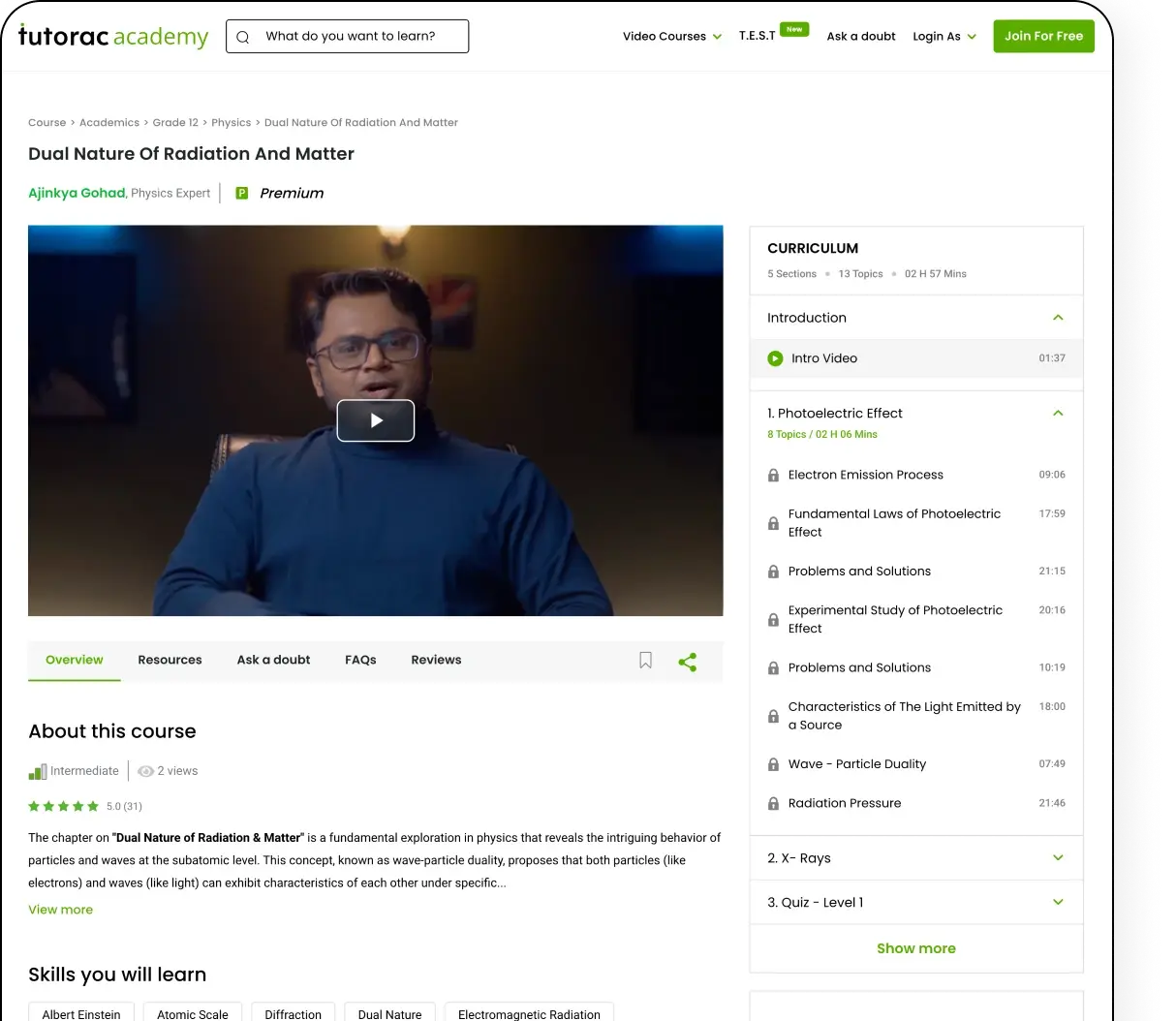

Tutorac Academy is a cutting-edge Learning Management platform, where learning meets innovation!

How data science consulting services can benefit your business?

- Data Acquisition and Engineering: Cleans, transforms, and prepares raw data for analysis, ensuring data quality and accessibility.

- Data Analysis and Modeling: Extracts insights from data using statistics, machine learning, and visualization tools to identify trends and patterns.

- Advanced Analytics Techniques: Leverages cutting-edge techniques like natural language processing and computer vision to unlock new possibilities for data analysis, especially for unstructured data.

- Data Migration: Moves data from various sources to a centralized data warehouse or platform, creating a foundation for analysis.
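As a rough illustration of data migration, the sketch below consolidates records from two hypothetical sources (a CSV export and a JSON feed) into one table in an in-memory SQLite database that stands in for a central warehouse. The source data, the `customers` table, and its columns are assumptions made up for this example; a production migration would target a real warehouse and add validation and incremental loading.

```python
import csv
import io
import json
import sqlite3

# Hypothetical source 1: a CSV export from a legacy system.
CSV_SOURCE = """id,name,country
1,Asha,India
2,Ben,US
"""

# Hypothetical source 2: a JSON feed from another application.
JSON_SOURCE = '[{"id": 3, "name": "Chloe", "country": "UK"}]'


def extract_csv(text):
    """Parse CSV text into (id, name, country) tuples."""
    reader = csv.DictReader(io.StringIO(text))
    return [(int(row["id"]), row["name"], row["country"]) for row in reader]


def extract_json(text):
    """Parse a JSON array of objects into the same tuple shape."""
    return [(int(r["id"]), r["name"], r["country"]) for r in json.loads(text)]


def migrate(conn, rows):
    """Load rows into the central table; INSERT OR REPLACE makes re-runs idempotent per id."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS customers "
        "(id INTEGER PRIMARY KEY, name TEXT NOT NULL, country TEXT)"
    )
    conn.executemany("INSERT OR REPLACE INTO customers VALUES (?, ?, ?)", rows)
    conn.commit()


if __name__ == "__main__":
    # An in-memory SQLite database stands in for the central warehouse.
    conn = sqlite3.connect(":memory:")
    migrate(conn, extract_csv(CSV_SOURCE) + extract_json(JSON_SOURCE))
    for row in conn.execute("SELECT id, name, country FROM customers ORDER BY id"):
        print(row)
```

Normalizing every source into the same tuple shape before loading keeps the load step independent of where the data came from.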

DID YOU KNOW?

Companies that make use of customer analytics are 23 times more likely to outperform competitors in customer acquisition, according to McKinsey!

Excellence: The standard for our client deliveries.

With over 30 awards and accolades to our name, our achievements reflect our quality and our commitment to client success.

Maximize your results with our expertise in data science

Python + NumPy + Pandas + SQL

This combo is essential for handling, cleaning, and analyzing data. NumPy and Pandas provide powerful tools for data manipulation, while SQL is crucial for querying and managing databases.
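A minimal sketch of how these pieces can fit together, assuming pandas and NumPy are installed: SQL (here an in-memory SQLite database via Python's built-in `sqlite3`) filters and aggregates close to the data, pandas loads the result with `pd.read_sql_query`, and NumPy applies a vectorized transform. The `orders` table, its columns, and the values are hypothetical.

```python
import sqlite3

import numpy as np
import pandas as pd

# Hypothetical sales data in a SQLite database (in memory for this sketch).
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (region TEXT, amount REAL)")
conn.executemany(
    "INSERT INTO orders VALUES (?, ?)",
    [("north", 120.0), ("north", 80.0), ("south", 300.0), ("south", None)],
)

# SQL does the filtering and aggregation at the source.
df = pd.read_sql_query(
    "SELECT region, SUM(amount) AS total, COUNT(amount) AS n_orders "
    "FROM orders WHERE amount IS NOT NULL GROUP BY region ORDER BY region",
    conn,
)

# pandas and NumPy handle the follow-up analysis.
df["avg_order"] = df["total"] / df["n_orders"]
df["revenue_share"] = df["total"] / df["total"].sum()
# A log-scaled total, a common transform for skewed monetary values.
df["log_total"] = np.log1p(df["total"])

print(df)
```

Pushing the aggregation into SQL means only the summarized rows are transferred into pandas, which matters once tables grow large.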

Python + Scikit-learn + XGBoost

A popular combination for classical machine learning: Scikit-learn provides a wide range of algorithms, while XGBoost is known for its performance in gradient boosting.
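A minimal sketch of a classical machine learning workflow, assuming scikit-learn is installed. To keep dependencies light, it uses scikit-learn's own `GradientBoostingClassifier` in place of XGBoost (XGBoost's `XGBClassifier` offers a scikit-learn-compatible fit/predict interface). The data is synthetic, generated with `make_classification`, so the scores say nothing about real-world performance; the hyperparameters are illustrative defaults, not tuned values.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import GradientBoostingClassifier
from sklearn.metrics import f1_score
from sklearn.model_selection import cross_val_score, train_test_split

# Synthetic binary classification data (stand-in for real features and labels).
X, y = make_classification(n_samples=500, n_features=10, n_informative=5, random_state=42)

# Hold out a test set so the final score is measured on unseen data.
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.2, stratify=y, random_state=42
)

model = GradientBoostingClassifier(
    n_estimators=100, learning_rate=0.1, max_depth=3, random_state=42
)

# 5-fold cross-validation on the training set estimates generalization
# before the held-out data is used.
cv_scores = cross_val_score(model, X_train, y_train, cv=5, scoring="f1")
print(f"CV F1: {cv_scores.mean():.3f} +/- {cv_scores.std():.3f}")

model.fit(X_train, y_train)
test_f1 = f1_score(y_test, model.predict(X_test))
print(f"Test F1: {test_f1:.3f}")
```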

Python + TensorFlow + Keras

Ideal for deep learning projects, TensorFlow and Keras offer a comprehensive platform for building neural networks.

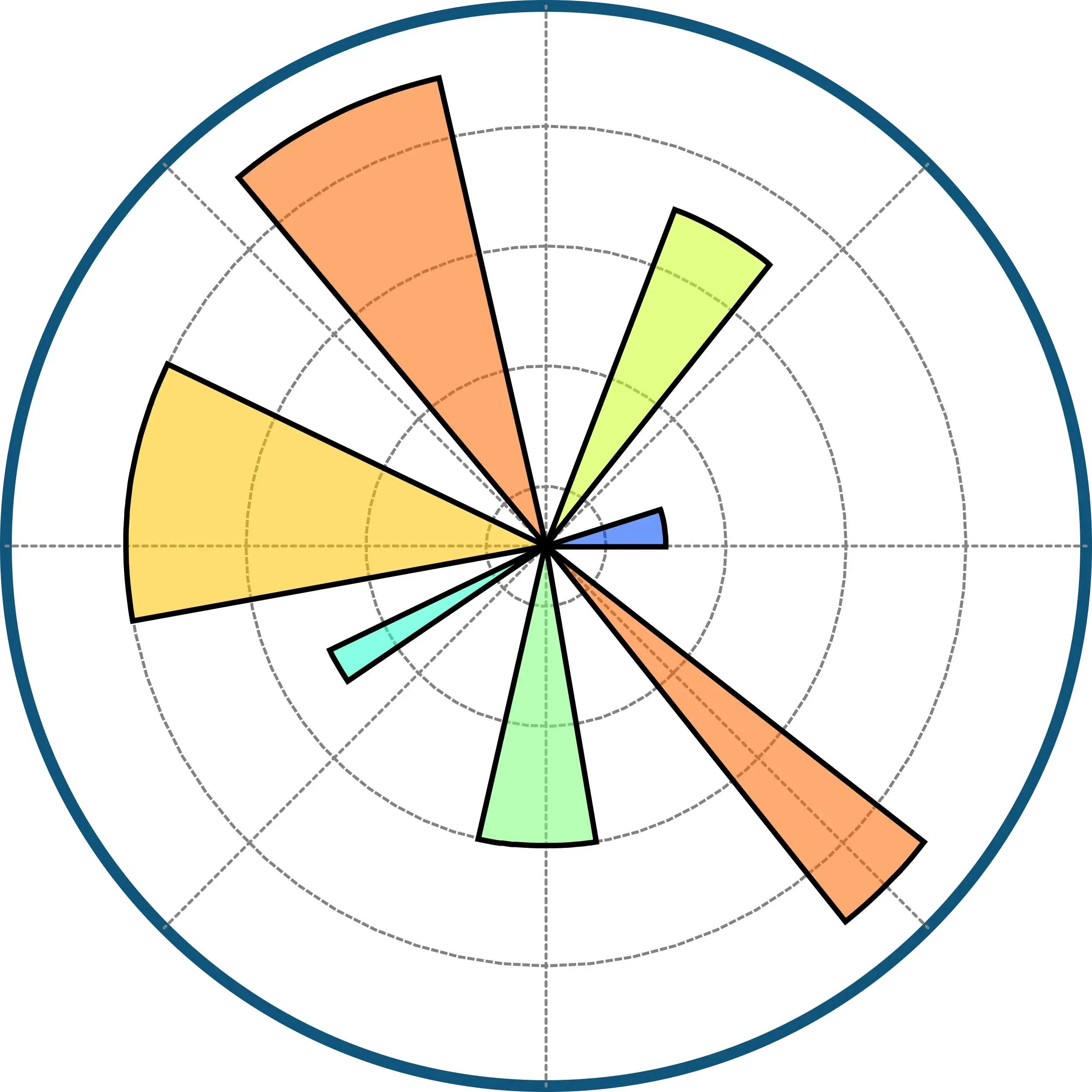

Python + Matplotlib + Seaborn

This combo is great for creating static and complex visualizations, with Seaborn built on top of Matplotlib to offer a high-level interface.

R + ggplot2

ggplot2 in R is known for its flexibility and layered grammar of graphics, making it easy to create complex plots.

Python + Airflow + Apache Kafka

This combo is used for managing data workflows and streaming data, with Airflow handling scheduling and Kafka managing real-time data streams.

Python + Jupyter Notebook + Anaconda

A comprehensive environment for data science, where Anaconda simplifies package management, and Jupyter Notebook provides an interactive coding platform.

Projects powered by our development team.

GetLitt!

e-Book Reading App for Kids with Gamification

- Game

- India

GetLitt! is the best online book-reading app for kids. Let your child discover a magical world of imagination, knowledge, and inspiration. At GetLitt!, we make reading fun.

- ReactJS

- NodeJS

- SQL Server

- Redis

Tutorac Academy

Online Learning Platform

- EdTech

- India

Tutorac Academy is a cutting-edge Learning Management platform, where learning meets innovation.

- ReactJS

- NodeJS

- SQL Server

- Redis

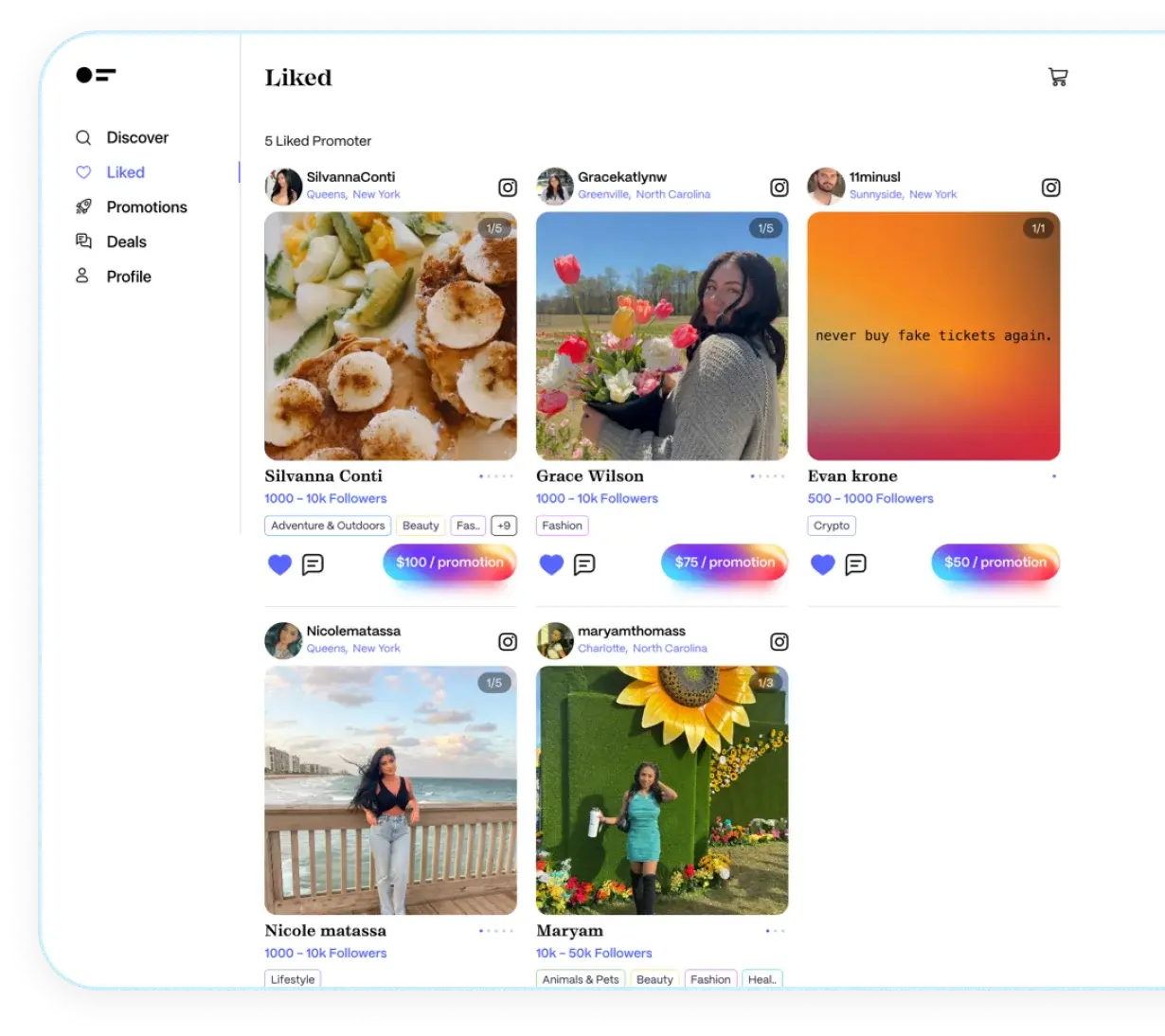

Userpromo

Influencer marketing service finder platform.

- Marketplace

- US

A platform for brands and content creators to connect and collaborate.

- ReactJS

- NodeJS

- SQL Server

- Redis

Choosing the right data science solution: expertise in tailored analytics

Choosing the right data science strategy is key to unlocking useful business insights and driving success for your projects. At Techuz, we specialize in customized data science solutions, designing and implementing models that suit your specific business goals.

Our expertise spans a wide range of data science techniques, from advanced statistical analysis to machine learning algorithms and deep learning models. We use these methods to extract insights, predict trends, and optimize processes, delivering actionable results relevant to your needs.

For strong performance and integration, we rely on essential tools: Jupyter Notebooks for interactive data analysis, Scikit-learn and TensorFlow for building and training models, and the visualization libraries Matplotlib and Seaborn for clear, intuitive presentation of your findings. We combine the latest developments in data science technology to deliver high-impact, customized solutions to business problems, grounded in accurate and actionable data insights.

With an in-house team of more than 50 dedicated data scientists and a track record of over 250 successfully completed projects, we bring deep expertise to every data science project. Our projects span the globe, from the USA to the UK and Australia. We excel at delivering exceptional value through cutting-edge analytics, predictive modeling, and insightful data solutions. Our commitment to quality and our in-depth understanding of client needs make us a first-choice provider of data science services. Trust us to turn your data into prescriptive insights and drive success with high-performance, customized data science solutions that excel in today's dynamic market.

Engagement models for our data science development services

Outsource development

Share your project idea with us, and we'll manage the entire development process for you. At Techuz, we simplify the journey from concept to completion. Here’s how it works:


1. Initial Consultation & Proposal

Share your project idea, goals, timeline, and specific requirements with us. We will conduct a detailed analysis, assess the scope, and outline our approach. You will receive a comprehensive proposal with our strategy, timeline, and costs.


2. Development & Quality Assurance

We kick off the development process, providing regular updates and milestones. Our team conducts rigorous testing to ensure functionality, security, and reliability throughout the development phase.


3. Delivery & Post-Launch Support

Upon completion, we deliver the project for your review and address any final adjustments. Post-launch, we offer ongoing support for enhancements or maintenance to ensure the continued success of your project.

The data science ecosystem we use in our projects.

Languages

Databases

Libraries and Packages

Cloud Platforms

Techuz is one of the best development and IT firms in the world.

And here’s what our clients say about us

Key trends in data science for future-proofing your solutions

Data science is evolving fast, with major leaps in how data is analyzed and put to work. At Techuz, we stay ahead of these trends so that your data science solution stays on top and future-ready.

Latest insights on data science

Similar technologies

Frequently Asked Questions

A typical data science project goes through the following stages:

Data Acquisition: Obtain relevant data from various sources, such as databases, APIs, and web scraping.

Data Cleaning and Transformation: Handle missing values, outliers, and inconsistencies to make the data suitable for analysis.

Model Building: Build and train machine learning or statistical models using algorithms for regression, classification, clustering, or deep learning.

Model Evaluation: Thoroughly assess the model's performance using metrics such as accuracy, precision, recall, and F1 score, so that it can be readjusted in time.

Deployment: Deploy the model to a production environment and verify that it works correctly there.

Monitoring and Maintenance: Continuously monitor the model's performance and update it as new data arrives or in response to feedback.
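The evaluation metrics named in the Model Evaluation stage all follow from confusion-matrix counts. The sketch below computes them in plain Python for a binary classifier; the labels are made-up example data, and in practice a library function such as scikit-learn's `precision_recall_fscore_support` would usually be used instead.

```python
def binary_metrics(y_true, y_pred, positive=1):
    """Return accuracy, precision, recall, and F1 for binary labels."""
    if len(y_true) != len(y_pred):
        raise ValueError("y_true and y_pred must have the same length")
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == positive and p == positive for t, p in pairs)  # true positives
    fp = sum(t != positive and p == positive for t, p in pairs)  # false positives
    fn = sum(t == positive and p != positive for t, p in pairs)  # false negatives
    tn = len(pairs) - tp - fp - fn                               # true negatives

    accuracy = (tp + tn) / len(pairs)
    # Guard against division by zero when there are no predicted or actual positives.
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    # F1 is the harmonic mean of precision and recall.
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}


y_true = [1, 0, 1, 1, 0, 0, 1, 0]
y_pred = [1, 0, 0, 1, 0, 1, 1, 1]
print(binary_metrics(y_true, y_pred))
```

For the sample labels (3 true positives, 2 false positives, 1 false negative, 2 true negatives) this gives accuracy 0.625, precision 0.6, recall 0.75, and an F1 score of about 0.667, which shows why accuracy alone can hide an imbalance between false positives and false negatives.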

For large-scale data processing, we rely on distributed computing frameworks such as Apache Spark and Hadoop, which process very large datasets efficiently by spreading the work across a cluster of machines. We also use cloud platforms such as Google Cloud, AWS, and Azure for scalable storage and compute to handle big data challenges.
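Hadoop MapReduce implements, and Spark generalizes, a split-map-reduce pattern: a large dataset is split into partitions, each partition is processed independently, and the partial results are combined by key. The sketch below illustrates that pattern on one machine, counting words across hypothetical text partitions, with a thread pool standing in for worker nodes. It is a conceptual illustration only, not Spark or Hadoop code; those frameworks add distributed storage, scheduling, and fault tolerance on top.

```python
from collections import Counter
from concurrent.futures import ThreadPoolExecutor
from functools import reduce

# Hypothetical input split into partitions, as a cluster would split a large file.
PARTITIONS = [
    "big data needs big tools",
    "spark and hadoop process big data",
    "data pipelines feed models",
]


def map_partition(text):
    """Map step: count words within one partition, independently of the others."""
    return Counter(text.split())


def merge_counts(left, right):
    """Reduce step: combine two partial counts by key (associative, so order does not matter)."""
    return left + right


def word_count(partitions, workers=3):
    # Threads stand in for worker nodes; a real cluster runs map tasks on separate machines.
    with ThreadPoolExecutor(max_workers=workers) as pool:
        partial_counts = list(pool.map(map_partition, partitions))
    return reduce(merge_counts, partial_counts, Counter())


if __name__ == "__main__":
    print(word_count(PARTITIONS).most_common(3))
```

Because the merge is associative, partial results can be combined in any order, which is what lets these frameworks spread the reduce work across many machines.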

The machine learning algorithms and techniques used in our implementations include:

Supervised learning, including regression, classification, and ensemble methods (for example, random forests and gradient boosting).

Unsupervised learning, including clustering (for example, K-means and DBSCAN) and dimensionality reduction (for example, PCA and t-SNE).

Deep learning, which leverages neural networks, including convolutional neural networks for image processing and recurrent neural networks for sequential data.
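To make one of these unsupervised techniques concrete, here is a minimal K-means sketch in NumPy using Lloyd's algorithm: assign each point to its nearest centroid, move each centroid to the mean of its assigned points, and repeat until the centroids stop moving. It assumes NumPy is installed and uses a deterministic farthest-first initialization for reproducibility; production code would typically use scikit-learn's `KMeans`, which adds k-means++ initialization and multiple restarts. The sample points are made up.

```python
import numpy as np


def _assign(X, centroids):
    """Assignment step: index of the nearest centroid (Euclidean) for each point."""
    dists = np.linalg.norm(X[:, None, :] - centroids[None, :, :], axis=2)
    return np.argmin(dists, axis=1)


def init_farthest_first(X, k):
    """Farthest-first initialization: start from the first point, then repeatedly
    add the point farthest from every centroid chosen so far."""
    centroids = [X[0]]
    for _ in range(1, k):
        chosen = np.array(centroids)
        nearest = np.linalg.norm(X[:, None, :] - chosen[None, :, :], axis=2).min(axis=1)
        centroids.append(X[np.argmax(nearest)])
    return np.array(centroids, dtype=float)


def kmeans(X, k, max_iter=100, tol=1e-6):
    """Lloyd's algorithm for K-means. Returns (centroids, labels)."""
    X = np.asarray(X, dtype=float)
    centroids = init_farthest_first(X, k)
    for _ in range(max_iter):
        labels = _assign(X, centroids)
        # Update step: move each centroid to the mean of its points;
        # keep the previous position if a cluster ends up empty.
        new_centroids = np.array([
            X[labels == j].mean(axis=0) if np.any(labels == j) else centroids[j]
            for j in range(k)
        ])
        converged = np.allclose(new_centroids, centroids, atol=tol)
        centroids = new_centroids
        if converged:
            break
    return centroids, _assign(X, centroids)


X = np.array([
    [0.0, 0.0], [0.5, 0.2], [0.1, 0.6],      # points near the origin
    [10.0, 10.0], [10.4, 9.8], [9.7, 10.3],  # points near (10, 10)
])
centroids, labels = kmeans(X, k=2)
print(labels)
print(centroids)
```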

Data quality and integrity are maintained using the following preprocessing procedures:

Data Validation: Check that the data are accurate, consistent, and complete.

Data Transformation: Normalize, scale, and encode data in preparation for modeling.

Handling Missing Data: Impute missing values in an attribute or, where imputation is not appropriate, delete the affected records.

Outlier Detection: Detect and handle outliers to avoid biasing the results.
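A minimal sketch of three of these steps on a single numeric column, using only Python's standard library: median imputation for missing values, outlier flagging with the interquartile-range (IQR) rule (values more than 1.5 × IQR beyond the quartiles), and min-max scaling to [0, 1]. The 1.5 multiplier is a common convention rather than a universal rule, dropping outliers is only one of several ways to handle them, and the sample values are made up.

```python
import statistics


def impute_median(values):
    """Replace None with the median of the observed values."""
    observed = [v for v in values if v is not None]
    median = statistics.median(observed)
    return [median if v is None else v for v in values]


def iqr_outliers(values, k=1.5):
    """Return booleans marking values outside [Q1 - k*IQR, Q3 + k*IQR]."""
    q1, _, q3 = statistics.quantiles(values, n=4)
    iqr = q3 - q1
    low, high = q1 - k * iqr, q3 + k * iqr
    return [v < low or v > high for v in values]


def min_max_scale(values):
    """Rescale values linearly to [0, 1]; a constant column maps to all zeros."""
    lo, hi = min(values), max(values)
    if hi == lo:
        return [0.0 for _ in values]
    return [(v - lo) / (hi - lo) for v in values]


raw = [12.0, 15.0, None, 14.0, 13.0, 95.0, 16.0]
filled = impute_median(raw)
flags = iqr_outliers(filled)
# Handle outliers before scaling so one extreme value does not compress the rest
# (here they are simply dropped; capping or investigating them are alternatives).
cleaned = [v for v, is_out in zip(filled, flags) if not is_out]
scaled = min_max_scale(cleaned)
print(filled, flags, scaled, sep="\n")
```

Here the missing value is filled with the median 14.5, only 95.0 falls outside the IQR fences, and the remaining six values scale to [0.0, 0.75, 0.625, 0.5, 0.25, 1.0]. The median is used instead of the mean because a single extreme value like 95.0 would otherwise pull the imputed value upward.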

We use the following advanced tools and frameworks for data visualization and reporting:

Python Libraries: Matplotlib, Seaborn, and Plotly, which support a wide variety of static and interactive visualizations.

Business Intelligence Tools: Tableau and Power BI, for building interactive dashboards and full-fledged reports.

Jupyter Notebooks: An interactive platform where live code and visualizations coexist with narrative text.

To make sure our solutions are scalable, we do the following:

Cloud-Based Solutions: Use cloud infrastructure so that storage and compute scale with demand.

Containerization: Run all our models in Docker containers to keep environments consistent from development through testing to production.

Microservices Architecture: Design applications as a set of microservices supporting independent scaling of components in response to demand.

We train and deploy our custom LLMs using state-of-the-art tools and libraries such as TensorFlow, PyTorch, and Hugging Face Transformers. These libraries provide robust support for training and deploying large-scale language models and keep pace with state-of-the-art techniques.

We develop and deploy AI models using a broad spectrum of tools and frameworks, including TensorFlow, PyTorch, Scikit-learn, and Keras. These frameworks support building, training, and optimizing complex models in line with industry standards.
